Imagine, if you will, an antivirus test taking place simultaneously in parallel universes, each differing from the others only by which product is being tested. This sort of quantum-computing test methodology is only available in the Twilight Zone, alas, but the researchers at Dennis Technology Labs come about as close to that ideal as possible. They capture the contents of real-world malicious sites and then use a replay system so each tested product encounters the malware attack in exactly the same way.

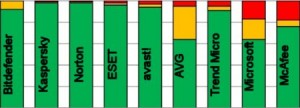

In the latest test, Kaspersky Internet Security (2013), Norton Internet Security (2013), and Bitdefender Internet Security 2013 rated AAA, the top rating.

Scoring Protection

Each product earns points for correctly detecting and preventing malware attacks, and loses points for failing to do so. Defending the system by completely preventing malware from launching earns three points. Neutralizing the attack after launch and fully reversing its effect is worth two points. Terminating the malware without fully cleaning up gets just one point. A product that fails to detect the malware or that tries and fails to prevent system compromise loses five points.

Researchers tested with 100 different real-world malware samples, so scores could range from 300 to negative 500. With 291 points, Norton edged out Kaspersky by a single point to take the top spot. At the bottom were Microsoft Security Essentials and McAfee Internet Security 2013, scoring 127 and 144 points respectively.
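For readers who want to check the arithmetic, here is a minimal sketch of the scoring rules above. The point values come from the description; the outcome labels and the function name are illustrative assumptions, not the lab's own terminology or tooling.

```python
# Point values follow the scoring rules described in the article.
# The outcome labels are illustrative names, not the lab's terminology.
POINTS = {
    "defended": 3,      # malware prevented from launching
    "neutralized": 2,   # attack stopped after launch, effects fully reversed
    "terminated": 1,    # malware stopped, but cleanup incomplete
    "compromised": -5,  # malware missed, or the product tried and failed
}


def protection_score(outcomes):
    """Sum the points for a list of per-sample outcome labels."""
    return sum(POINTS[outcome] for outcome in outcomes)


# The two extremes for a 100-sample test.
best = protection_score(["defended"] * 100)
worst = protection_score(["compromised"] * 100)
print(best, worst)  # 300 -500

# A hypothetical mix, not any tested product's real breakdown.
mixed = protection_score(
    ["defended"] * 90 + ["neutralized"] * 5 + ["compromised"] * 5
)
print(mixed)  # 255
```

Because a failure costs five points while the best outcome earns only three, even a handful of compromises drags a score down quickly.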

Looking at things another way, Bitdefender actually won this challenge, because it detected every attack and either defended against or neutralized each one. Kaspersky and Norton missed a couple, but earned higher scores by fully defending against almost every threat they detected.

False Positives

In addition to testing real-world defense against malware attack, the researchers also worked to verify that these security products correctly refrained from blocking legitimate programs, or reporting them as suspicious. Each product starts with a perfect 100 false positive points. Blocking a legitimate program costs anywhere from 0.1 point (for a very low impact program) to 5 points (for a very high impact program). Reporting a legitimate program as suspicious, rather than blocking it, incurs a “fine” of half the blocking penalty for that program.
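The deduction scheme can be sketched roughly as follows. The article gives only the two ends of the penalty scale (0.1 and 5 points), so the intermediate impact values, the input layout, and the function name here are all assumptions made for illustration.

```python
def false_positive_score(incidents, start=100.0):
    """Deduct false positive penalties from the starting score.

    incidents is a list of (impact_cost, action) pairs. impact_cost is the
    blocking penalty for that program (0.1 to 5 points), and action is either
    "blocked" or "suspicious". Both the pair layout and the labels are
    assumptions for this sketch.
    """
    score = start
    for impact_cost, action in incidents:
        if not 0.1 <= impact_cost <= 5:
            raise ValueError(f"impact cost out of range: {impact_cost}")
        if action == "blocked":
            score -= impact_cost
        elif action == "suspicious":
            score -= impact_cost / 2  # half the blocking penalty
        else:
            raise ValueError(f"unknown action: {action}")
    return score


# No false positives leaves the perfect score intact.
print(false_positive_score([]))  # 100.0

# Hypothetical incidents: one very high impact program blocked, and one
# program with a 0.5-point blocking penalty merely flagged as suspicious.
print(false_positive_score([(5, "blocked"), (0.5, "suspicious")]))  # 94.75
```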

Microsoft and McAfee did much better in this test, both earning a perfect 100. With 75 points, ESET Smart Security 6 had the lowest score. Norton’s 90 points put it second-lowest.

Overall Accuracy

Adding the scores for protection and false positives gives us the product’s total accuracy, with a theoretical range from 400 points down to negative 1,000. All three of the AAA-rated products scored over 380 points. avast! Free Antivirus 7 rated AA, with 366 points. The other free antivirus tested, AVG Anti-Virus FREE 2013, earned a C rating, due more to poor protection than false positives. In between those two, ESET and Trend Micro Internet Security 2013 rated a single A.

As for McAfee and Microsoft, their stellar lack of false positives couldn’t overcome their poor performance in the protection test. Neither received certification even at the C level, though Microsoft scored 100 points more than in the previous test by Dennis Technology Labs. That’s a steady improvement by Microsoft, given that in the test before that it earned a below-zero score.

I’m highly impressed with this lab’s testing methodology. I only wish they’d put a wider range of products to the test. If you’d like to dig deeper, the very detailed full test report is available on the Dennis Technology Labs website, along with parallel reports on SMB and Enterprise security products.